A data engineer is building a robust pipeline to process customer feedback. They need to extract specific sentiment categories (food_quality, food_taste, wait_time, food_cost) from text reviews and ensure the output is always a valid JSON object matching a predefined schema, even for complex reviews. They also want to control the determinism of the LLM responses.

Which of the following SQL statements or considerations are correct for achieving this using Snowflake Cortex AI functions?

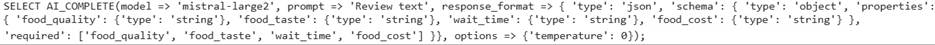

A. The following SQL statement uses the response_format argument and temperature setting to achieve structured output and determinism:

B. The response_format argument with a JSON schema is primarily for OpenAI (GPT) models; for other models like Mistral, a strong prompt instruction such as 'Respond in strict JSON' is generally more effective.

C. To ensure the model explicitly attempts to extract all specified fields, the 'required' array in the JSON schema is critical; AI_COMPLETE will raise an error if any required field cannot be extracted.

D. Using AI_COMPLETE with response_format incurs additional compute cost for the overhead of verifying each token against the supplied JSON schema, in addition to standard token costs.

E. For the most consistent structured output, especially in complex reasoning tasks, setting the temperature option to 0 when calling AI_COMPLETE is recommended.

Explanation:

Option A is correct because it demonstrates the proper use of the `AI_COMPLETE` function with the `response_format` argument to specify a JSON schema, and it sets `temperature` to 0 for consistent output, as per the documentation.
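The pattern described for Option A can be sketched as follows. This is a minimal illustration, not the statement from the question itself: the table `customer_reviews`, its columns `review_id` and `review_text`, and the choice of `mistral-large2` are assumptions made for the example, and the named-argument form should be checked against the current Snowflake documentation before use.

```sql
-- Hypothetical source table: customer_reviews(review_id, review_text).
SELECT
    review_id,
    AI_COMPLETE(
        -- Assumed model name; substitute any model available in your region.
        model => 'mistral-large2',
        prompt => CONCAT(
            'Extract the sentiment for each category from this review. ',
            'Respond in JSON. Review: ',
            review_text
        ),
        -- Temperature 0 for the most consistent output.
        model_parameters => {'temperature': 0},
        -- JSON schema the response must conform to.
        response_format => {
            'type': 'json',
            'schema': {
                'type': 'object',
                'properties': {
                    'food_quality': {'type': 'string'},
                    'food_taste':   {'type': 'string'},
                    'wait_time':    {'type': 'string'},
                    'food_cost':    {'type': 'string'}
                },
                -- All four categories must be present in the output.
                'required': ['food_quality', 'food_taste', 'wait_time', 'food_cost']
            }
        }
    ) AS sentiment
FROM customer_reviews;
```

The prompt also includes 'Respond in JSON', which supplements the schema rather than replacing it; the schema in `response_format` remains the mechanism that enforces the structure.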

Option C is correct because the `required` field in the JSON schema specifies which fields must be extracted, and `COMPLETE` (or `AI_COMPLETE`) will raise an error if any of these fields cannot be extracted.

Option E is correct because, for the most consistent results, setting the `temperature` option to 0 is recommended when calling `COMPLETE` (or `AI_COMPLETE`) with structured outputs, regardless of the task or model.

Option B is incorrect because all models supported by `AI_COMPLETE` support structured output, and specifying `response_format` is the direct mechanism for enforcing a schema. For complex tasks, adding 'Respond in JSON' to the prompt can still improve accuracy, but it supplements the schema rather than replacing it.

Option D is incorrect because `AI_COMPLETE` Structured Outputs incurs compute cost based on the number of tokens processed, but it does not incur additional compute cost for the overhead of verifying each token against the supplied JSON schema.